Our Technology

Temi Robot

A smart robot for smarter research.

Temi lets us explore how people interact with intelligent systems in real-world environments, bringing human-robot interaction to life.

- Ideal for studying public engagement, automated service delivery, and digital trust

- Guide participants, deliver information, or collect real-time responses hands-free

- Observe behaviour in motion across labs, public spaces, and service environments

We use Temi to understand how people respond to robot-led communication, navigation, and simulated customer service. Whether in government offices, retail settings, or business contexts, Temi helps us study the future of automation—capturing how humans engage with technology in the moment.

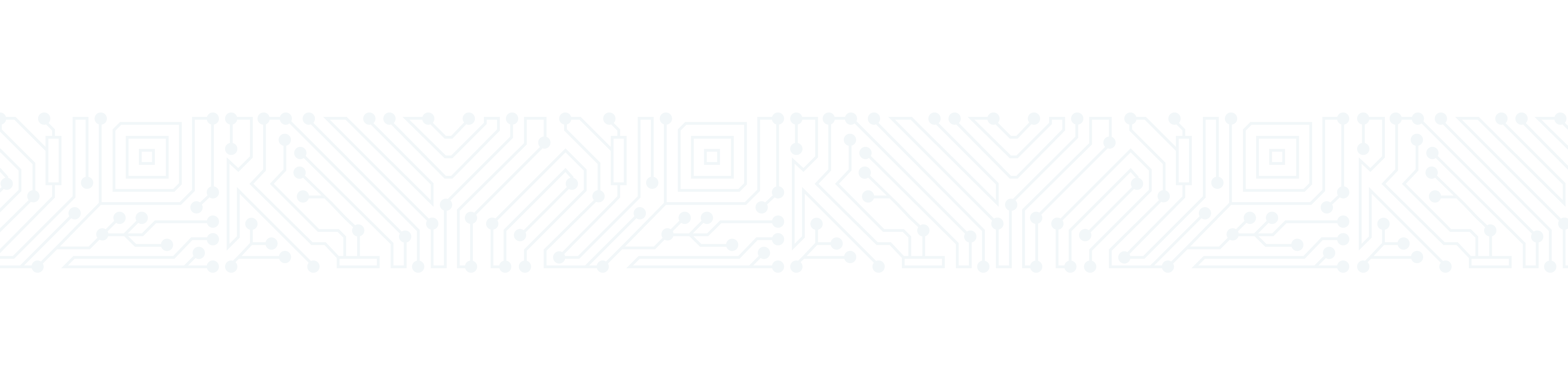

Screen-Based Eye Tracking

See what captures attention, pixel by pixel.

Our screen-based eye tracking technology reveals exactly how users engage with digital content, interfaces, and messaging—capturing real-time visual attention with precision.

- Ideal for web testing, marketing research, and cognitive studies

- Track gaze, fixations, and attention patterns with high accuracy

- Uncover subconscious behaviour to refine design, messaging, and usability

We use wireless, non-intrusive systems that work seamlessly in both lab and field settings. Whether you’re testing digital ads, government communications, or political messaging, we help you understand what truly draws the eye—and why.

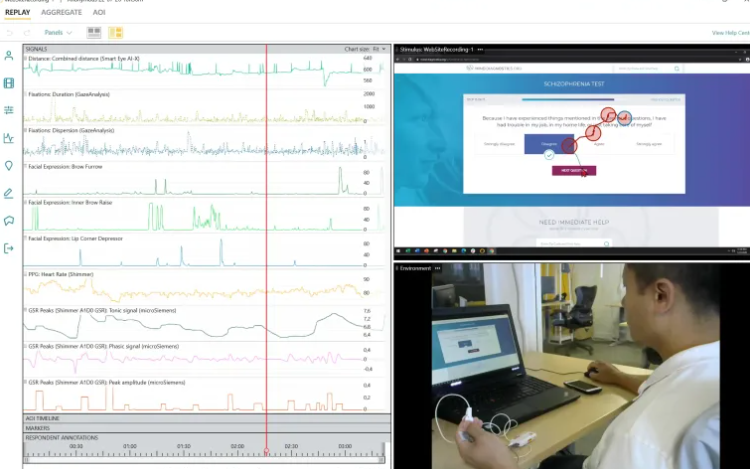

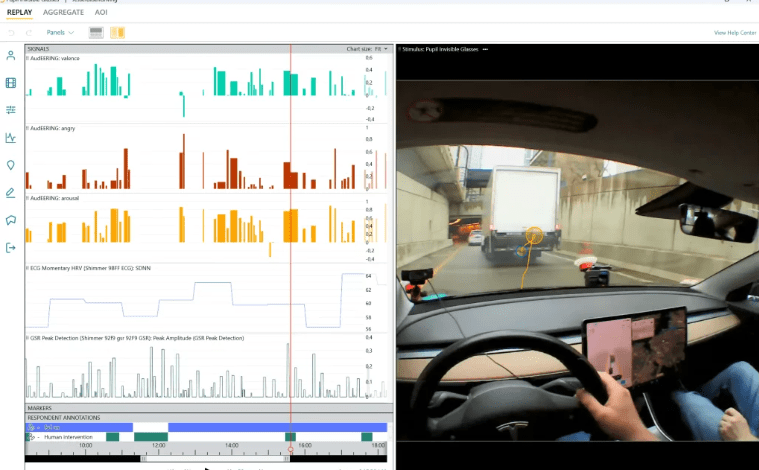

Eye Tracking Glasses

See the world through their eyes.

Our eye tracking glasses capture exactly where participants look as they move, interact, and make decisions in real-world environments.

- Ideal for field studies, service design, and behaviour mapping in complex settings

- Measure visual attention to optimise spatial layout, user journeys, and decision-making

- Record gaze data unobtrusively in offices, retail spaces, or public environments

Our Lab uses wireless, lightweight glasses that let participants move naturally, whether navigating a government office, browsing retail displays, or engaging with public services. This technology reveals how people respond to environments, signage, products, and interactions in the moment, helping researchers and designers create more intuitive, human-centred experiences.

Respiration Monitoring

Breathe in the data.

Our respiration technology tracks breathing rate, depth, and variability in real time—revealing how emotional and cognitive states shift under pressure.

- Ideal for stress testing, emotional response studies, and cognitive workload analysis

- Measure subtle changes in breath to assess focus, tension, and resilience

- Capture physiological responses in real-world or high-performance environments

Using discreet respiration sensors, we explore how breathing patterns reflect mental and emotional strain, from the boardroom to the training field. By decoding these signals, we help organisations and researchers design healthier, more sustainable performance strategies.

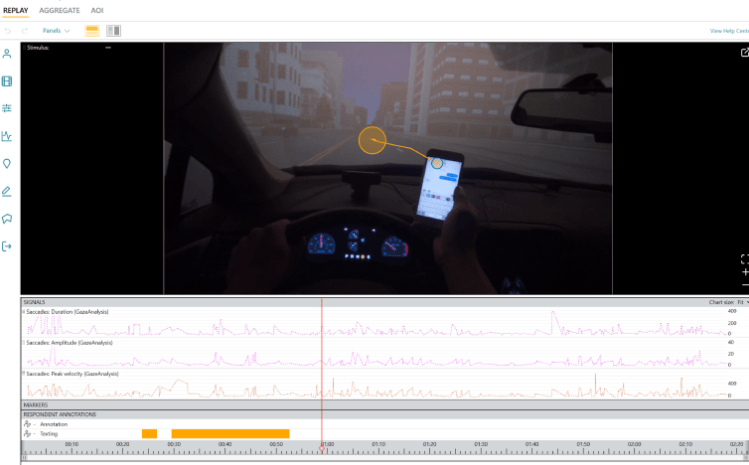

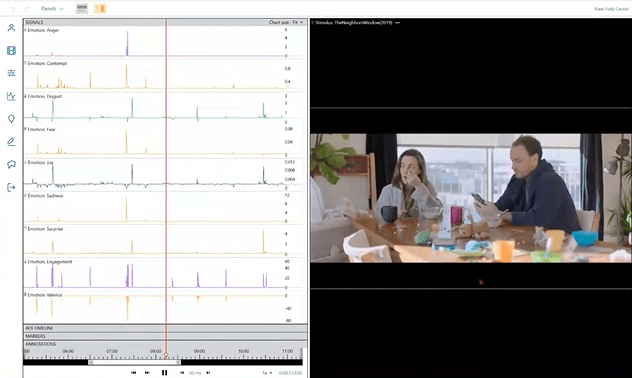

Facial Expression Analysis

See emotion in motion.

Our facial expression analysis technology captures subtle, real-time shifts in facial movement to reveal how people truly feel in response to content, experiences, or decisions.

- Perfect for emotional response studies, media testing, and UX research

- Decode micro-expressions and facial cues to uncover mood, engagement, and sentiment

- Measure emotional reactions non-invasively, in real-world or controlled environments

Using AI-powered facial expression recognition, we map emotional responses to everything from digital ads and legal messaging to public service campaigns and product design. It’s not just about what people say; it’s about how they feel. Combined with our biosensors, facial expression analysis (FEA) delivers a powerful, multi-modal view of human decision-making and emotional impact.

Voice Analysis

Hear what words can’t say.

Our advanced voice-analysis technology decodes the emotional and cognitive signals hidden in pitch, tone, pace, and vocal tension, revealing what’s really going on beneath the surface.

- Track sentiment, train customer service teams, and assess credibility with precision

- Detect stress, engagement, and persuasion cues through vocal pattern analysis

- Capture and process speech in real time, in the lab or in the field

Powered by AI, our voice analytics reveal how tone and cadence shape trust, influence, and decision-making. Whether it’s interview simulations, parliamentary speeches, or remote interviews, voice data helps businesses, policymakers, and legal professionals sharpen their message and elevate communication. Paired with our biosensors, it delivers a complete, multi-modal view of human response.

GenAI

Imagine the Future — Then Create It with GenAI.

Our generative AI research empowers people and communities to see new possibilities, change behaviours, and shape a better society. We use AI-driven insights to uncover pathways to wellbeing, equity, and opportunity.

- Visualise positive futures — from healthy lifestyle choices to sustainable behaviours — and inspire action through immersive storytelling.

- Help kids see themselves in non-traditional roles with AI-generated role models, personalised career pathways, and future-ready skill exploration.

- Design campaigns that drive change, using AI to forecast behavioural trends and test interventions before they’re launched.

- Integrate behavioural science and governance to ensure AI is ethical, inclusive, and built for human good.
